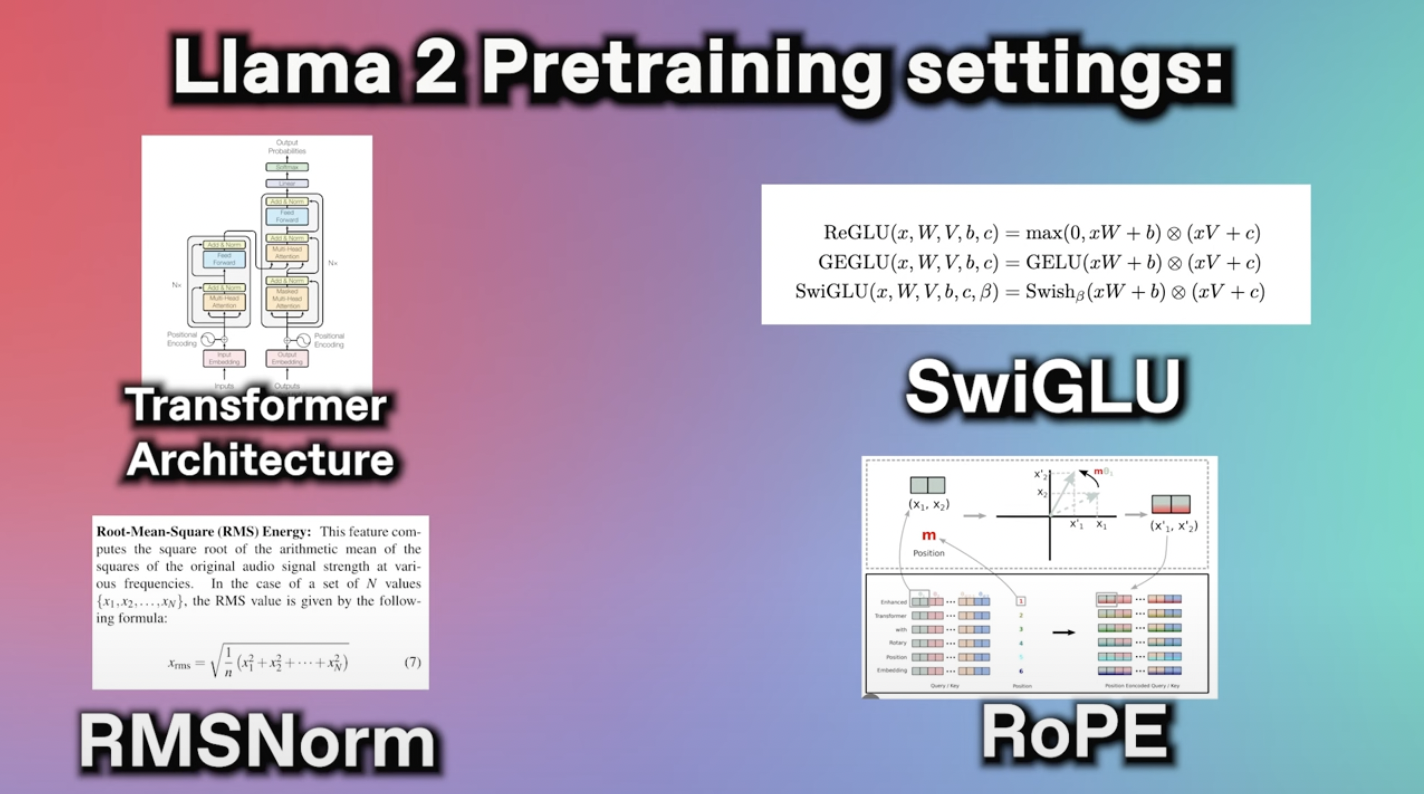

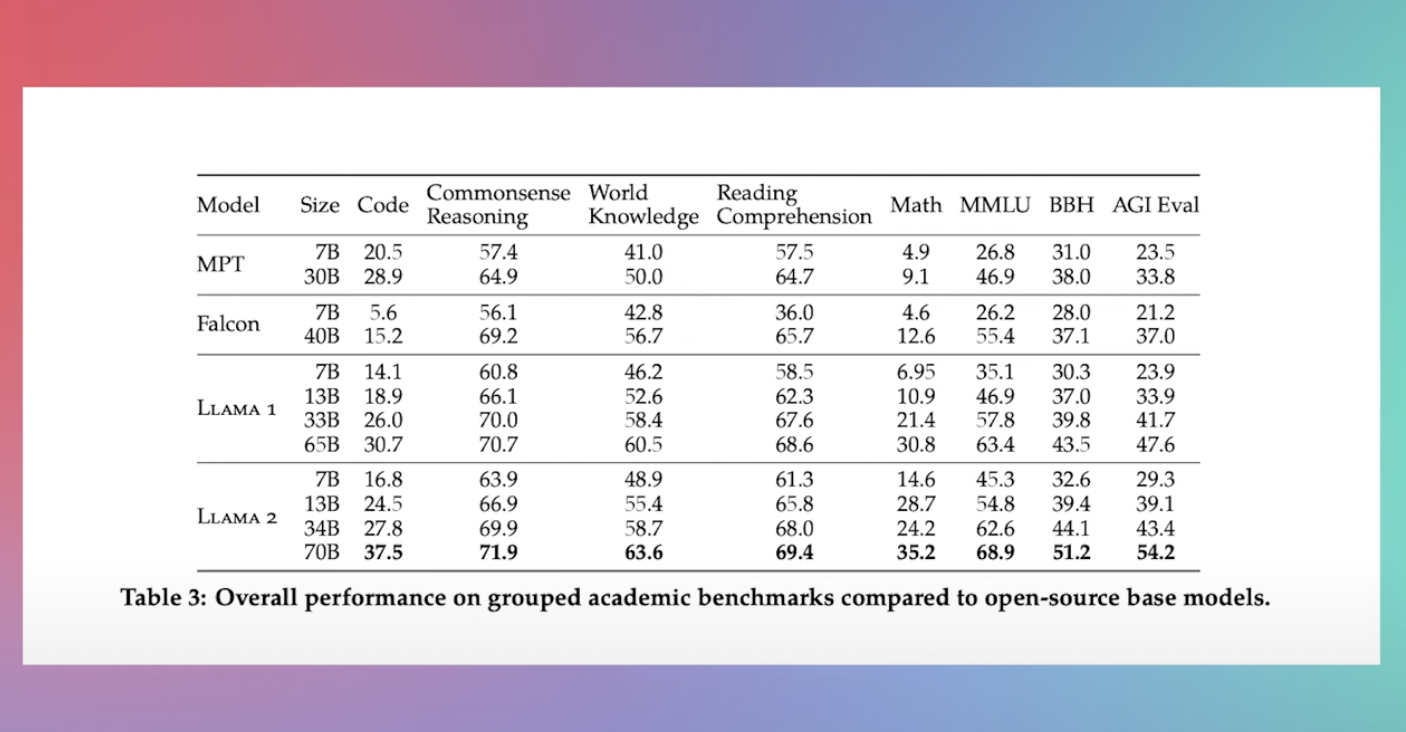

Llama 2, much like other AI models, is built on a classic Transformer architecture. To make the 2,000,000,000,000 (2 trillion) tokens and internal weights easier to handle, Meta … Llama 2 is a family of pretrained and fine-tuned large language models (LLMs), ranging in scale from 7 billion to 70 billion parameters, developed and released by the AI group at Meta, the parent company of Facebook. The fine-tuned LLMs are called Llama 2-Chat.

AI Breakdown, or Takeaways From the 78-Page Llama 2 Paper (Deepgram)

Chat with Llama 2 70B. Customize Llama's personality by clicking the settings button. "I can explain concepts, write poems and code, solve logic puzzles, or even name your …" Experience the power of Llama 2, the second-generation large language model by Meta. Choose from three model sizes, pre-trained on 2 trillion tokens and fine-tuned with over a million human annotations. Llama 2 was pretrained on publicly available online data sources. The fine-tuned model, Llama Chat, leverages publicly available instruction datasets and over 1 million human annotations. With more than 100 foundation models available to developers, you can deploy AI models with a few clicks, as well as run fine-tuning tasks in a notebook in Google Colab. Llama 2 is a family of state-of-the-art open-access large language models released by Meta today, and we're excited to fully support the launch with comprehensive integration …

Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 7B pretrained model, converted for the … All three model sizes (7B, 13B, and 70B) are available for download on Hugging Face. Ollama is another option: run, create, and share large language models with Ollama. "The files are here, locally downloaded from Meta: checklist.chk, consolidated.00.pth, params.json. Now I would like to interact with the model." One option for downloading the model weights and tokenizer of Llama 2 is the Meta AI website. Before you can download the model weights and …
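Before trying to interact with a locally downloaded checkpoint, it can help to confirm that the expected files are present and to look at the model hyperparameters. The following is a minimal sketch, not an official tool: it assumes a single-shard download containing the three files named above, and the folder name `llama-2-7b` and the helper `inspect_checkpoint_dir` are hypothetical names chosen for illustration.

```python
import json
from pathlib import Path

# File names for a single-shard download, as listed in the text above (assumed layout).
EXPECTED_FILES = ("checklist.chk", "consolidated.00.pth", "params.json")


def inspect_checkpoint_dir(model_dir):
    """Return (missing_files, params) for a locally downloaded checkpoint folder."""
    model_dir = Path(model_dir)
    # Collect the names of expected files that are not present in the folder.
    missing = [name for name in EXPECTED_FILES if not (model_dir / name).is_file()]
    # params.json is plain JSON; load it if it exists, otherwise return None.
    params = None
    params_path = model_dir / "params.json"
    if params_path.is_file():
        params = json.loads(params_path.read_text(encoding="utf-8"))
    return missing, params


if __name__ == "__main__":
    # "llama-2-7b" is a hypothetical local path; point it at your own download.
    missing, params = inspect_checkpoint_dir("llama-2-7b")
    print("missing files:", missing)
    print("params.json contents:", params)
```

This only checks the download; loading the weights and generating text needs an inference library on top of it.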


In this blog post, we will look at how to fine-tune Llama 2 70B using PyTorch FSDP and related best practices. We will be leveraging Hugging Face Transformers … Understanding Llama 2 and model fine-tuning: Llama 2 is a collection of second-generation open-source LLMs from Meta that comes with a commercial license. It is designed to handle a wide … We made it possible for anyone to fine-tune Llama-2-70B on a single A100 GPU by layering the following optimizations into Ludwig … We were able to fine-tune the LLaMA 2 70B model on the Dolly v2 dataset for 1 epoch for as low as $19.25 using MonsterTuner. The outcome of fine-tuning using Monster API for the … FSDP fine-tuning on the Llama 2 70B model: for enthusiasts looking to fine-tune the extensive 70B model, the low_cpu_fsdp mode can be activated as follows …
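To see why fine-tuning the 70B model calls for sharding (as with FSDP) or other memory optimizations, a back-of-the-envelope estimate of the weight memory is useful. The sketch below is simple arithmetic under stated assumptions: it uses the nominal parameter counts (7B, 13B, 70B) rather than exact ones, counts only the weights (no gradients, optimizer state, or activations), and the precision table and function name are illustrative choices, not something taken from the posts quoted above.

```python
# Rough weight-memory estimate: number of parameters x bytes per parameter.
# Parameter counts are the nominal model sizes, not exact counts.
MODEL_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}

# Bytes used to store one parameter at common precisions (illustrative table).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "4-bit": 0.5}


def weight_memory_gb(n_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GB (10**9 bytes)."""
    return n_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, n_params in MODEL_PARAMS.items():
        row = ", ".join(
            f"{precision}: {weight_memory_gb(n_params, nbytes):.1f} GB"
            for precision, nbytes in BYTES_PER_PARAM.items()
        )
        print(f"Llama 2 {name} -> {row}")
```

At 16-bit precision the 70B weights alone come to roughly 140 GB, more than a single A100 (40 GB or 80 GB) can hold, while at 4 bits they come to roughly 35 GB. Training adds further memory on top of the weights, so these figures are a lower bound.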
